Quantify hidden workflow waste and apply AI to reclaim time and margin.

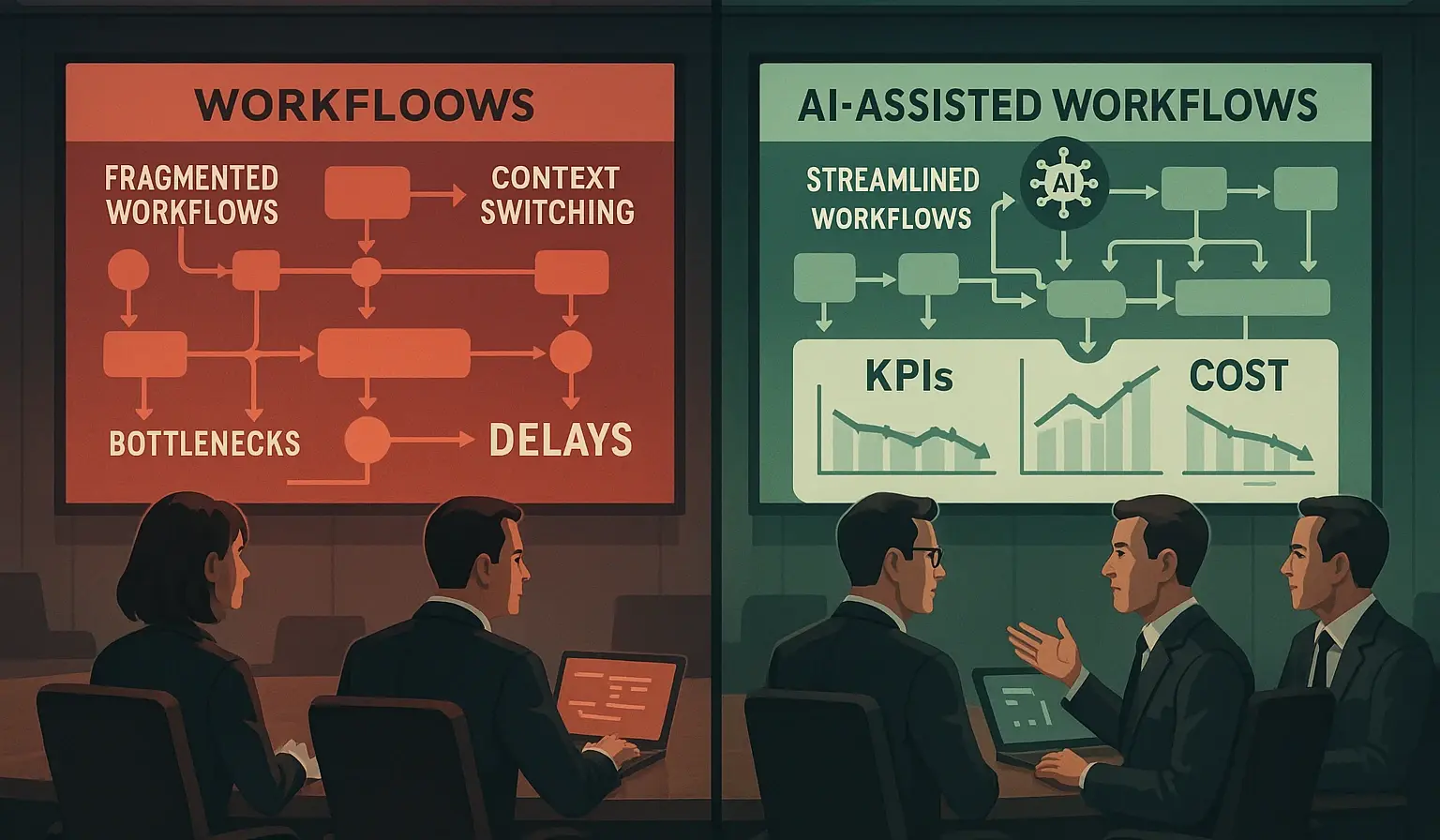

Most enterprises don’t lack effort—they leak it. Busywork, context switching, manual reconciliations, and wait states quietly tax margins and morale. The challenge is that this waste hides in plain sight, scattered across apps and teams.

Start by making it measurable. Time‑motion studies and value‑stream mapping reveal where work queues pile up and handoffs stall. Anatomy‑of‑work research finds that knowledge workers can lose a full day per week to coordination overhead and tool toggling (Asana). Slack’s State of Work snapshots echo the productivity penalty of fractured workflows and constant pings (Slack). Convert anecdotes into data: instrument cycle times, queue depths, rework rates, and exception rates within your core processes, whether claims, onboarding, order-to-cash, or support.
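As a rough illustration of that instrumentation, the sketch below derives cycle time and a rework rate from a case event log. The log layout, the step names, and the rule that a repeated step counts as rework are all assumptions made for the example, not a prescribed schema.

```python
from datetime import datetime
from statistics import median

# Hypothetical event log: (case_id, step, ISO timestamp). Names are illustrative.
EVENTS = [
    ("C1", "intake", "2024-03-01T09:00"),
    ("C1", "review", "2024-03-01T13:00"),
    ("C1", "closed", "2024-03-02T09:00"),
    ("C2", "intake", "2024-03-01T10:00"),
    ("C2", "review", "2024-03-02T10:00"),
    ("C2", "review", "2024-03-03T10:00"),  # repeated step, treated as rework
    ("C2", "closed", "2024-03-04T10:00"),
]


def case_metrics(events):
    """Return per-case cycle time in hours and the number of repeated steps."""
    by_case = {}
    for case_id, step, ts in events:
        by_case.setdefault(case_id, []).append((datetime.fromisoformat(ts), step))

    metrics = {}
    for case_id, rows in by_case.items():
        rows.sort()  # order events by timestamp
        hours = (rows[-1][0] - rows[0][0]).total_seconds() / 3600
        steps = [step for _, step in rows]
        metrics[case_id] = {
            "cycle_hours": hours,
            "rework_steps": len(steps) - len(set(steps)),
        }
    return metrics


metrics = case_metrics(EVENTS)
# Share of cases with at least one repeated step.
rework_rate = sum(m["rework_steps"] > 0 for m in metrics.values()) / len(metrics)
median_cycle_hours = median(m["cycle_hours"] for m in metrics.values())
print(metrics, rework_rate, median_cycle_hours)
```

In practice the events would come from the workflow or ticketing system rather than a hard-coded list, and queue depth and exception rate could be derived from the same log.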

Track context switches and manual touches per case. Build a cost model that translates delay and rework into dollars: labor cost, error risk, lost revenue, and customer churn. Operations leaders who quantify at this granularity can prioritize ruthlessly, fund change with confidence, and resist “automation theater.”
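One way to sketch such a cost model is shown below. Every field name and figure is a hypothetical placeholder, and the model is deliberately simplified: it covers labor, rework, and churn, and leaves error risk and other lost revenue to be added as further terms.

```python
from dataclasses import dataclass


@dataclass
class ProcessStats:
    """Illustrative monthly inputs for one process; all values are assumptions."""
    cases_per_month: int
    manual_minutes_per_case: float
    rework_rate: float             # share of cases that need rework
    rework_minutes: float          # extra minutes per reworked case
    loaded_hourly_cost: float      # fully loaded labor cost per hour
    delayed_share: float           # share of cases that miss their target time
    churn_per_delayed_case: float  # assumed added churn probability per delayed case
    customer_value: float          # assumed value lost per churned customer


def monthly_waste_cost(p: ProcessStats) -> dict:
    """Translate manual effort, rework, and delay into monthly dollars."""
    labor = p.cases_per_month * p.manual_minutes_per_case / 60 * p.loaded_hourly_cost
    rework = (p.cases_per_month * p.rework_rate * p.rework_minutes / 60
              * p.loaded_hourly_cost)
    churn = (p.cases_per_month * p.delayed_share
             * p.churn_per_delayed_case * p.customer_value)
    return {"labor": labor, "rework": rework, "churn": churn,
            "total": labor + rework + churn}


claims = ProcessStats(
    cases_per_month=1000, manual_minutes_per_case=12, rework_rate=0.1,
    rework_minutes=30, loaded_hourly_cost=60, delayed_share=0.2,
    churn_per_delayed_case=0.01, customer_value=1500,
)
costs = monthly_waste_cost(claims)
print(costs)
```

Running the same function per process gives a comparable dollar figure for each bottleneck, which is what makes ranking and funding decisions defensible.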

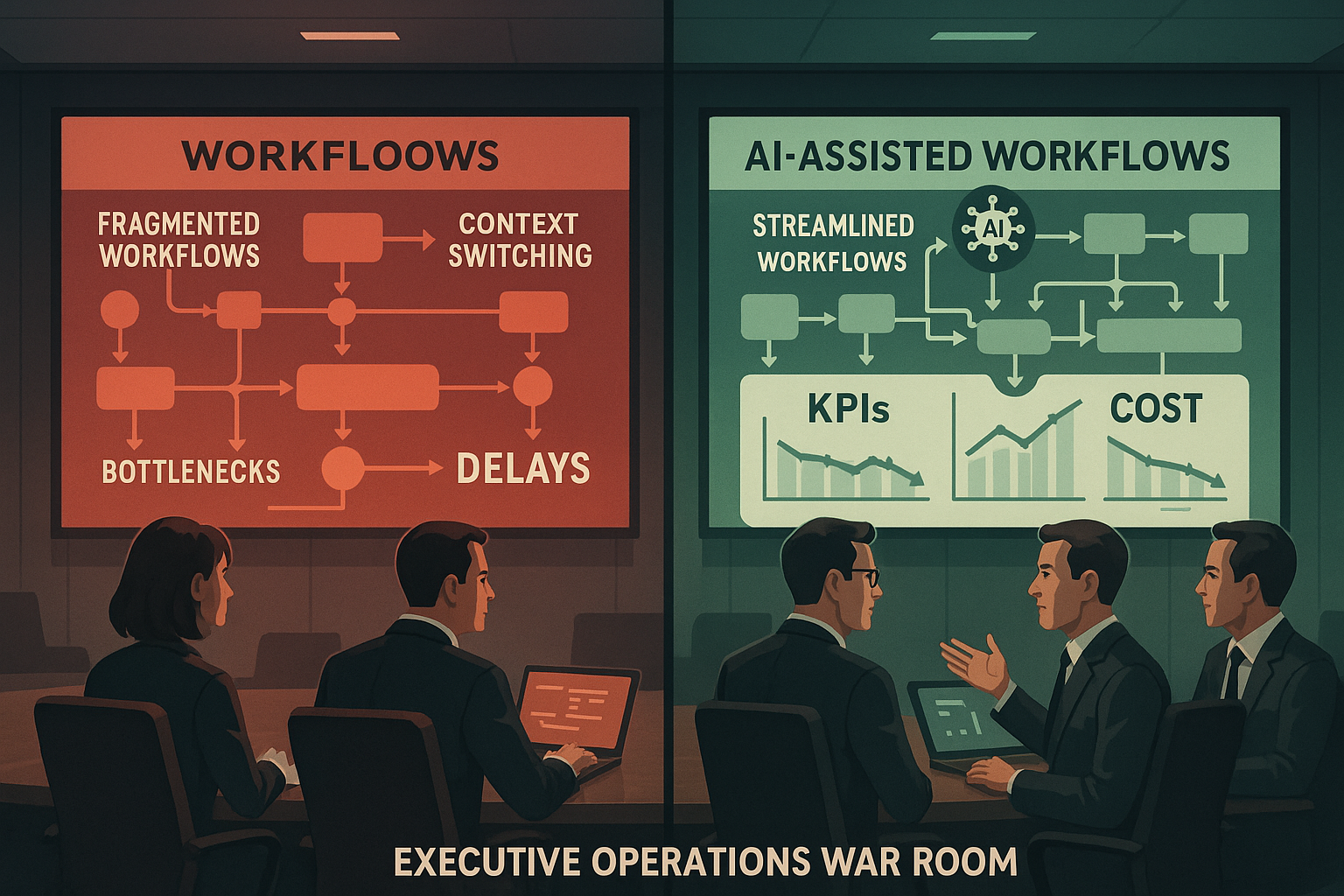

Finally, ground the analysis in customer impact. Long handle times and opaque status updates erode trust. Public benchmarks and case libraries show that organizations applying automation and AI to the right bottlenecks compress cycle times and raise satisfaction; McKinsey’s operations insights provide directional ranges and patterns to watch (McKinsey).

Once you can see the waste, target the knots where AI has leverage. High‑leverage patterns repeat across MapleSage’s ICPs:

- Insurance claims: AI agents can classify intake, route by complexity, summarize adjuster notes, and trigger status updates, reducing manual touches and inbound calls. Case write‑ups show claims cycle times shrink materially when triage and communication are automated (e.g., Ricoh).
- SaaS customer ops: surface churn risk from usage and sentiment, draft outreach, and escalate to humans with context packs. This turns reactive firefighting into proactive saves.
- Back‑office reconciliation: document understanding and policy‑aware agents move first‑pass checks from humans to machines, reserving experts for exceptions.

Avoid the rip‑and‑replace trap. Keep deterministic RPA for low‑variance steps and layer AI agents at decision nodes where context matters. This hybrid preserves uptime while unlocking judgment. Blue/green and canary deployment patterns let you introduce AI safely; practical primers explain how to deploy without disrupting operations (Harness).

Reliability requires observability: if you can’t see it, you can’t scale it. Treat golden signals (latency, error rate, saturation, throughput) as first‑class, and pair them with business KPIs (NPS, AHT, cost‑to‑serve). Accessible overviews help non‑SRE leaders grasp why observability pays (Splunk).
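To make the canary idea concrete, here is a minimal sketch of a promotion gate that compares a canary's golden signals against the current baseline. The `Signals` fields and the threshold defaults are illustrative assumptions, not recommended values; a real gate would also check business KPIs and require a minimum sample size.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    """Golden signals for one deployment over an observation window."""
    p95_latency_ms: float
    error_rate: float      # failed requests / total requests
    saturation: float      # 0..1 utilization of the tightest resource
    throughput_rps: float  # recorded for context; not gated in this sketch


def canary_decision(baseline: Signals, canary: Signals,
                    max_latency_ratio: float = 1.2,
                    max_error_delta: float = 0.005,
                    max_saturation: float = 0.8):
    """Return ("promote" | "rollback", list of breached signals)."""
    breaches = []
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        breaches.append("latency")
    if canary.error_rate - baseline.error_rate > max_error_delta:
        breaches.append("error_rate")
    if canary.saturation > max_saturation:
        breaches.append("saturation")
    return ("rollback" if breaches else "promote", breaches)


baseline = Signals(p95_latency_ms=400, error_rate=0.010, saturation=0.50, throughput_rps=120)
healthy = Signals(p95_latency_ms=430, error_rate=0.011, saturation=0.55, throughput_rps=12)
degraded = Signals(p95_latency_ms=650, error_rate=0.030, saturation=0.55, throughput_rps=12)

print(canary_decision(baseline, healthy))
print(canary_decision(baseline, degraded))
```

Returning the list of breached signals alongside the decision keeps the rollback explainable to non-SRE stakeholders.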

Governance must be built‑in. Classify data, minimize PII, and restrict agent scopes by region and purpose. The NIST AI RMF provides a risk‑based control set you can apply without slowing teams down.

Evidence keeps transformations honest. Before you ship, define service level objectives (SLOs) for both business and technical outcomes. For the business: cycle time, first‑contact resolution, claim adjudication speed, and net revenue retention. For the stack: latency, availability, and quality/error budgets.
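An error budget follows directly from an SLO target: it is the number of "bad" events the target tolerates in a window. The sketch below shows the arithmetic; the function name and the example figures are hypothetical, and the same calculation works for a business SLO (for example, claims that missed a status-update deadline) as for a technical one.

```python
def error_budget(slo_target: float, total_events: int, bad_events: int) -> dict:
    """Compute how much of an SLO's error budget has been consumed.

    slo_target is the required share of good events, e.g. 0.99.
    """
    allowed_bad = (1 - slo_target) * total_events
    # A 100% target leaves no budget, so any bad event exhausts it.
    consumed = bad_events / allowed_bad if allowed_bad > 0 else float("inf")
    return {
        "allowed_bad": allowed_bad,
        "consumed": consumed,        # 1.0 means the budget is fully spent
        "remaining": 1 - consumed,   # negative means the SLO is breached
    }


# Assumed example: 99% of 10,000 requests must succeed; 40 failed so far.
budget = error_budget(slo_target=0.99, total_events=10_000, bad_events=40)
print(budget)
```

A common use is to pause risky releases once the remaining budget nears zero, which ties release pace to measured reliability rather than opinion.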

Run canary cohorts with stop‑loss thresholds and instant rollback paths. Attribute impact at the journey‑node level (e.g., “claim status update on day 3” or “onboarding blocker removal at week 1”) so you don’t mistake channel noise for value. Make experiments routine. Randomized controlled experiments are the gold standard; where they are not feasible, use quasi‑experimental designs (difference‑in‑differences, matched cohorts) and pre‑register thresholds.
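For readers unfamiliar with difference-in-differences, the sketch below shows the basic estimate: the change in the treated cohort minus the change in a comparison cohort over the same period. The cycle-time figures are invented for illustration, and the estimate is only credible if both cohorts would have trended alike without the change (the parallel-trends assumption).

```python
from statistics import mean


def diff_in_diff(treated_pre, treated_post, control_pre, control_post) -> float:
    """Difference-in-differences estimate of the treatment effect on a metric."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical claim cycle times in days, before and after an automation rollout.
treated_pre = [10.0, 12.0, 11.0]
treated_post = [7.0, 8.0, 9.0]
control_pre = [10.0, 11.0, 12.0]
control_post = [10.0, 10.0, 10.0]

effect = diff_in_diff(treated_pre, treated_post, control_pre, control_post)
print(effect)  # negative values mean cycle time fell relative to control
```

Here the treated cohort improved by three days while the control cohort improved by one, so only two days of the gain are attributed to the change. Comparing that figure against a threshold fixed before the rollout is what pre-registration means in practice.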

Publish monthly value realization reviews that reconcile incremental lift with cost and risk. This cadence separates durable gains from novelty spikes. Reference cases point to sustained savings and satisfaction lifts when organizations marry disciplined measurement to targeted automation; see industry stats roundups on workflow automation’s impact (Feathery).

Finally, design the operating model: a cross‑functional council spanning operations, data, security, and legal; clear RACI; and a backlog that treats automation as a product. With these habits—and a privacy‑first posture—ops leaders turn hidden costs into visible wins, compounding margin while improving customer experience.
