A practical guide to evolving from RPA to intelligent, agentic automation.

Limits of RPA and when it still shines in 2025

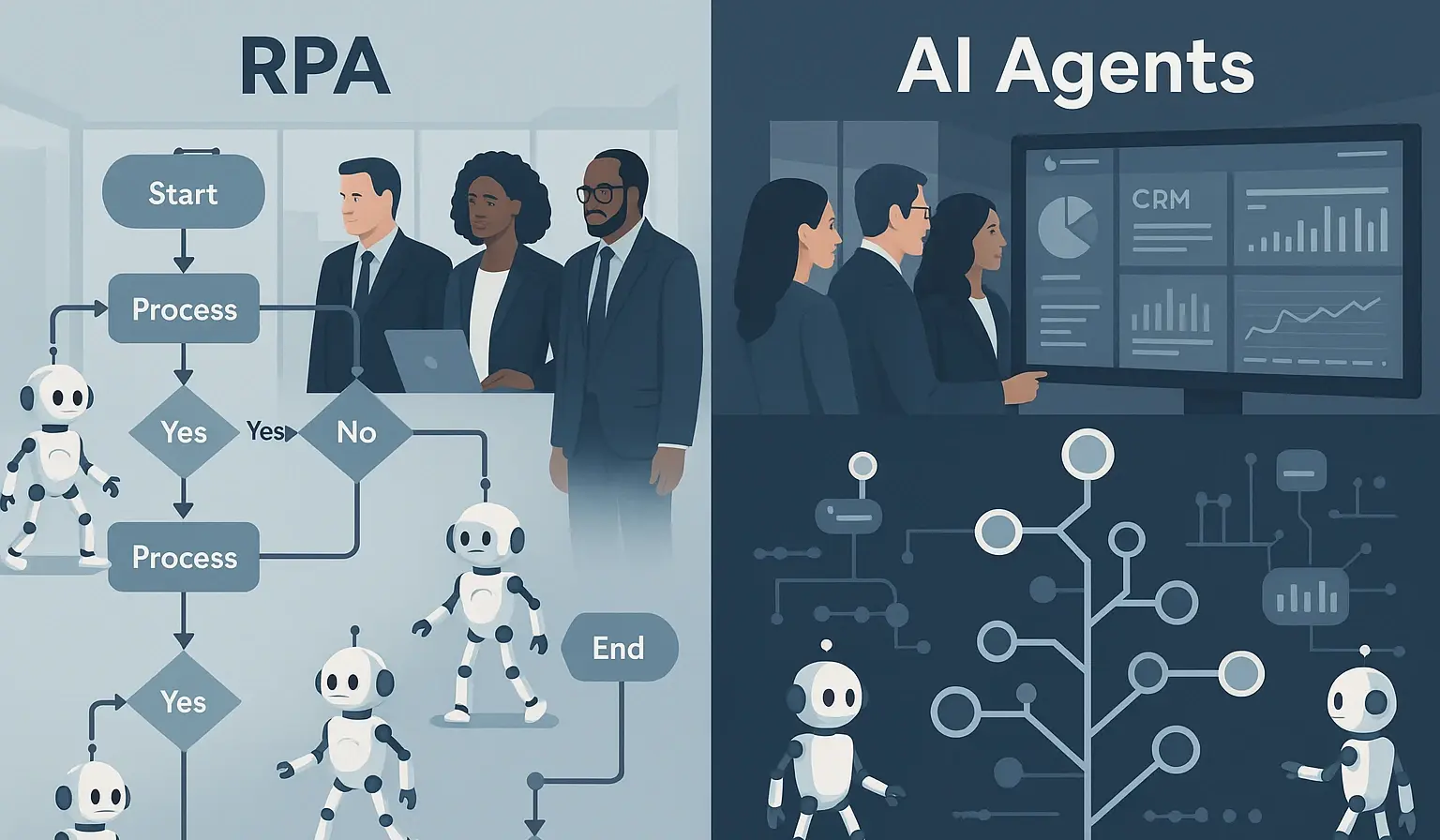

Robotic Process Automation (RPA) earned its place by taming repetitive, rules-based tasks—form fills, simple reconciliations, and swivel-chair integrations. In 2025, it still shines where inputs are structured, exceptions are rare, and process variance is minimal. For many enterprises, RPA stabilized brittle workflows and delivered quick wins without re-architecting core systems. But RPA struggles under uncertainty: unstructured documents, multi-step decisions, policy nuance, and evolving business rules increase exception rates and maintenance costs.

As processes span CRMs, policy administration systems, ERPs, and support platforms, rule-only bots hit a ceiling: each new exception path needs another hand-written rule to build and maintain. That ceiling is why leaders are augmenting or replacing pure RPA with intelligent automation and agentic AI. Smarter automations also change more often, so independent overviews of deployment patterns stress zero-downtime techniques (blue/green, rolling, and canary releases) for teams iterating on them in production.
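The following minimal Python sketch makes the canary idea concrete. It assumes a hypothetical setup in which a fixed percentage of customers is routed to a new agent-backed path while everyone else stays on the stable RPA flow. The function names and the salt value are illustrative only. Production rollouts would more typically rely on a load balancer, service mesh, or deployment tool than on hand-rolled routing code.

```python
import hashlib

def in_canary(customer_id: str, rollout_percent: float, salt: str = "agent-release-1") -> bool:
    """Return True if this customer falls in the canary cohort.

    Hashing the ID with a per-release salt maps each customer to a stable
    bucket in [0, 10000), so a customer keeps seeing the same variant for
    as long as the rollout percentage is unchanged.
    """
    digest = hashlib.sha256(f"{salt}:{customer_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 10_000
    return bucket < rollout_percent * 100  # 5.0 percent -> buckets 0..499

def route(customer_id: str, rollout_percent: float) -> str:
    # "candidate" = new agent-backed automation; "stable" = existing RPA flow
    return "candidate" if in_canary(customer_id, rollout_percent) else "stable"

if __name__ == "__main__":
    ids = [f"cust-{i}" for i in range(10_000)]
    share = sum(route(c, 5.0) == "candidate" for c in ids) / len(ids)
    print(f"canary share at 5%: {share:.3f}")  # close to 0.05, not exact
```

Raising `rollout_percent` only adds customers to the cohort, because any bucket below the old threshold is also below the new one. This makes gradual expansion straightforward, and setting the percentage to 0 rolls everyone back to the stable path.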

Practical primers from HashiCorp and Harness explain blue/green, rolling, and canary releases in terms accessible to ops and IT teams (HashiCorp; Harness). Because reliability without visibility is luck, Splunk's observability guidance offers a crisp starting point (Splunk). In short, RPA remains useful, but the center of gravity is shifting toward systems that can perceive, decide, and act within guardrails.

Intelligent agents: design patterns, controls, and integration

Intelligent agents differ from RPA in three ways: perception (they can interpret text, voice, or images and fetch relevant context), decisioning (they weigh evidence against policy to choose among actions), and learning (they update playbooks via experimentation and feedback). Architecturally, treat the agent as a first-class microservice: versioned APIs, explicit scopes, and robust safety rails.
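The "explicit scopes" idea can be sketched as a deny-by-default check that every agent action must pass before it reaches a system of record. This is a minimal illustration. The scope strings such as `"claims:read"` and the `AgentContract` fields are hypothetical, and this is not the API of any particular agent framework.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class AgentContract:
    """Versioned contract for one agent service (hypothetical fields)."""
    name: str
    api_version: str
    scopes: frozenset[str] = field(default_factory=frozenset)

class ScopeError(PermissionError):
    """Raised when an agent attempts an action outside its granted scopes."""

def allowed(contract: AgentContract, action: str) -> bool:
    # Deny by default: only actions listed in the contract are permitted.
    return action in contract.scopes

def authorize(contract: AgentContract, action: str) -> None:
    if not allowed(contract, action):
        raise ScopeError(f"{contract.name}@{contract.api_version} lacks scope {action!r}")

claims_agent = AgentContract(
    name="claims-triage",
    api_version="v1",
    scopes=frozenset({"claims:read", "notes:draft"}),
)
authorize(claims_agent, "claims:read")  # permitted, returns None
# authorize(claims_agent, "payments:issue") would raise ScopeError
```

One reasonable convention is to bump `api_version` whenever the granted scopes change. That way, old and new grants stay distinguishable in logs and reviews.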

Start the agent in “advisory mode,” where it suggests actions and drafts communications, then graduate to supervised execution for narrow, low-risk steps, and only later to autonomy within tightly bounded scopes. This staircase approach is especially important in regulated industries.
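The staircase can be expressed as a small gate. For each proposed action, the gate decides whether the agent only suggests it, waits for a human, or executes it. This is a sketch under stated assumptions. The three modes mirror the text, while the binary `"low"`/`"high"` risk label and the `gate` function are hypothetical simplifications.

```python
from enum import IntEnum

class Mode(IntEnum):
    ADVISORY = 1    # suggests actions and drafts communications only
    SUPERVISED = 2  # executes narrow, low-risk steps after human approval
    AUTONOMOUS = 3  # executes low-risk steps within a bounded scope, no per-action approval

def gate(mode: Mode, action_risk: str, human_approved: bool = False) -> str:
    """Decide what happens to one proposed action at the current autonomy level.

    action_risk is a hypothetical label, either "low" or "high".
    Returns "suggest", "await_approval", or "execute".
    """
    if mode is Mode.ADVISORY or action_risk != "low":
        # Advisory mode never acts, and high-risk actions stay advisory at every level.
        return "suggest"
    if mode is Mode.SUPERVISED:
        return "execute" if human_approved else "await_approval"
    return "execute"  # Mode.AUTONOMOUS with a low-risk action

print(gate(Mode.SUPERVISED, "low"))                       # await_approval
print(gate(Mode.SUPERVISED, "low", human_approved=True))  # execute
print(gate(Mode.AUTONOMOUS, "high"))                      # suggest
```

An `IntEnum` keeps the rungs ordered. A per-use-case ceiling such as "never above supervised" therefore becomes a simple comparison, for example `min(requested_mode, Mode.SUPERVISED)`.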

Design patterns that work at enterprise scale include:

a) orchestration layers that mediate between agents and systems of record (CRM, ERP, policy admin),

b) semantic retrieval boundaries to avoid data leakage in knowledge lookups,

c) feature flags and kill switches for agent behaviors, and

d) immutable logs of prompts, retrieved evidence, actions, and outcomes.
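Patterns (c) and (d) can be sketched together. A flag check acts as a kill switch, and a hash-chained log records prompts, evidence, actions, and outcomes. This is a minimal in-memory illustration with a hypothetical flag name and record schema. Hash chaining makes the log tamper-evident; true immutability would also require write-once storage.

```python
import copy
import hashlib
import json
import time

GENESIS = "0" * 64

def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, hash-chained log: each entry commits to the previous hash.

    Editing any stored entry breaks the chain, which verify() detects.
    This is tamper-evident, not tamper-proof.
    """

    def __init__(self) -> None:
        self._entries: list[dict] = []

    def append(self, record: dict) -> str:
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        body = {"ts": time.time(), "prev": prev, "record": copy.deepcopy(record)}
        entry_hash = _digest(body)
        self._entries.append({**body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        prev = GENESIS
        for e in self._entries:
            body = {"ts": e["ts"], "prev": e["prev"], "record": e["record"]}
            if e["prev"] != prev or e["hash"] != _digest(body):
                return False
            prev = e["hash"]
        return True

# Hypothetical flag store; in practice this would be a feature-flag service.
FLAGS = {"claims_triage.auto_route": True}

def run_behavior(flag: str, log: AuditLog, prompt: str, evidence: list, action: str) -> str:
    enabled = FLAGS.get(flag, False)  # unknown flags are treated as off
    if enabled:
        outcome = "executed"  # placeholder: the orchestrator would call the system of record here
    else:
        outcome = "blocked_by_kill_switch"
    log.append({
        "flag": flag,
        "prompt": prompt,
        "evidence": evidence,
        "action": action if enabled else None,
        "outcome": outcome,
    })
    return outcome
```

Setting the flag to `False` stops the behavior immediately while still logging the blocked attempt. Changing any stored entry afterward makes `verify()` return `False`.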

For governance, align controls to the NIST AI Risk Management Framework and ISO/IEC 42001, mapping each use case to a risk tier with corresponding testing depth (NIST; ISMS.online).
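Mapping use cases to risk tiers lends itself to a simple, reviewable function. The tiers, classification rules, and test-suite names below are hypothetical examples; neither the NIST AI RMF nor ISO/IEC 42001 prescribes these exact categories.

```python
from dataclasses import dataclass

# Hypothetical test suites per tier; higher tiers include every suite of lower tiers.
TESTS_BY_TIER = {
    "low": ["unit", "regression"],
    "medium": ["unit", "regression", "bias_eval", "adversarial_prompts"],
    "high": ["unit", "regression", "bias_eval", "adversarial_prompts",
             "human_review_sample", "shadow_mode"],
}

@dataclass(frozen=True)
class UseCase:
    name: str
    customer_facing: bool
    moves_money: bool          # e.g. issues payments or changes premiums
    uses_personal_data: bool

def risk_tier(uc: UseCase) -> str:
    """Assign a tier with deliberately simple, reviewable rules (illustrative only)."""
    if uc.moves_money or (uc.customer_facing and uc.uses_personal_data):
        return "high"
    if uc.customer_facing or uc.uses_personal_data:
        return "medium"
    return "low"

def required_tests(uc: UseCase) -> list[str]:
    return TESTS_BY_TIER[risk_tier(uc)]

agent_assist = UseCase("agent_assist", customer_facing=False, moves_money=False, uses_personal_data=True)
print(risk_tier(agent_assist), required_tests(agent_assist))
# medium ['unit', 'regression', 'bias_eval', 'adversarial_prompts']
```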

In customer service and claims handling, Gartner's use-case catalogs help separate durable patterns (agent assist, knowledge deflection, guided workflows) from hype (Gartner).

Migration roadmap, SLAs, and value measurement

A pragmatic migration roadmap minimizes risk and maximizes learning:

- Inventory and segment processes: keep RPA where variance is low; target agents where context and judgment matter (claims triage, complex escalations, entitlement checks, churn-risk outreach).

- Establish SLAs/SLOs for both business and technical outcomes—cycle time, accuracy, customer satisfaction, and error budgets for latency and availability.

- Pilot with shadow mode, then supervised actions in canary cohorts. Use cost and quality guardrails; roll back fast if thresholds are breached.

- Instrument the full pipeline and measure incremental value via controlled experiments. Focus on “decisions changed” and “cost-to-serve reduced,” not just activity counts.
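The SLO and rollback steps in this roadmap can be sketched as a guardrail check run against canary metrics. The thresholds and the cost-per-case field are hypothetical. The error-budget arithmetic follows the common SRE definition, in which a 99.5% availability target allows 0.5% of requests to fail.

```python
from dataclasses import dataclass

@dataclass
class SLO:
    availability_target: float  # 0.995 means at most 0.5% of requests may fail
    max_p95_latency_ms: float
    max_cost_per_case: float    # hypothetical cost guardrail, in your currency unit

@dataclass
class CanaryStats:
    requests: int
    failures: int
    p95_latency_ms: float
    cost_per_case: float

def error_budget_remaining(slo: SLO, stats: CanaryStats) -> float:
    """Share of the error budget left: 1.0 = untouched, <= 0 = exhausted."""
    allowed_failures = (1 - slo.availability_target) * stats.requests
    if allowed_failures == 0:
        return 1.0 if stats.failures == 0 else 0.0
    return 1 - stats.failures / allowed_failures

def rollback_reasons(slo: SLO, stats: CanaryStats) -> list[str]:
    """Return every breached guardrail; an empty list means the canary may continue."""
    reasons = []
    if error_budget_remaining(slo, stats) <= 0:
        reasons.append("error budget exhausted")
    if stats.p95_latency_ms > slo.max_p95_latency_ms:
        reasons.append("p95 latency above SLO")
    if stats.cost_per_case > slo.max_cost_per_case:
        reasons.append("cost per case above guardrail")
    return reasons

slo = SLO(availability_target=0.995, max_p95_latency_ms=800, max_cost_per_case=2.50)
print(rollback_reasons(slo, CanaryStats(1000, 2, 650, 1.80)))   # []
print(rollback_reasons(slo, CanaryStats(1000, 12, 900, 1.80)))  # ['error budget exhausted', 'p95 latency above SLO']
```

A 1,000-request canary at a 99.5% target allows only about five failures, so a very small cohort can exhaust its budget on noise alone. Cohort size is worth choosing with that in mind.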

External references can help benchmark expectations: McKinsey documents significant savings and cycle-time compression where AI augments core operations (McKinsey).

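For security and change control, adopt "governance as code" (policies and control checks kept in version control and enforced automatically) and run periodic audits mapped to ISO/IEC 42001 and the NIST AI RMF; TrustCloud's and Vanta's guides offer practical checklists (TrustCloud; Vanta).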
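Here is a small example of "governance as code." The policy lives in version control, and a check runs on every change, failing the pipeline if an audit is overdue or a required control is unmapped. The audit intervals, tier names, and control identifiers are hypothetical and are not the actual ISO/IEC 42001 Annex A or NIST AI RMF labels.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical policy values; set these from your own ISO/IEC 42001 / NIST AI RMF mapping.
MAX_AUDIT_AGE = {"low": timedelta(days=365), "medium": timedelta(days=180), "high": timedelta(days=90)}
REQUIRED_CONTROLS = {"risk_assessment", "human_oversight", "incident_response"}

@dataclass
class UseCaseRecord:
    name: str
    risk_tier: str                                          # "low", "medium", or "high"
    last_audit: date
    mapped_controls: set[str] = field(default_factory=set)  # hypothetical control IDs

def policy_violations(rec: UseCaseRecord, today: date) -> list[str]:
    problems = []
    if today - rec.last_audit > MAX_AUDIT_AGE[rec.risk_tier]:
        problems.append(f"{rec.name}: audit overdue")
    missing = REQUIRED_CONTROLS - rec.mapped_controls
    if missing:
        problems.append(f"{rec.name}: missing controls {sorted(missing)}")
    return problems

def check_all(records: list[UseCaseRecord], today: date) -> int:
    """Print violations and return a process exit code (non-zero fails a CI job)."""
    violations = [v for r in records for v in policy_violations(r, today)]
    for v in violations:
        print(v)
    return 1 if violations else 0
```

In a CI step, `sys.exit(check_all(records, date.today()))` turns any violation into a failed build. Each audit gap then surfaces as a reviewable change rather than a spreadsheet entry.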

For SageSure's ideal customer profile (ICP), the win is not replacing everything at once. It is layering intelligence atop stable automation, preserving uptime, and using evidence to scale what works.