Hybrid Automation: Designing RPA + AI Agents That Scale

A pragmatic blueprint for combining RPA with agentic AI—safely and measurably.

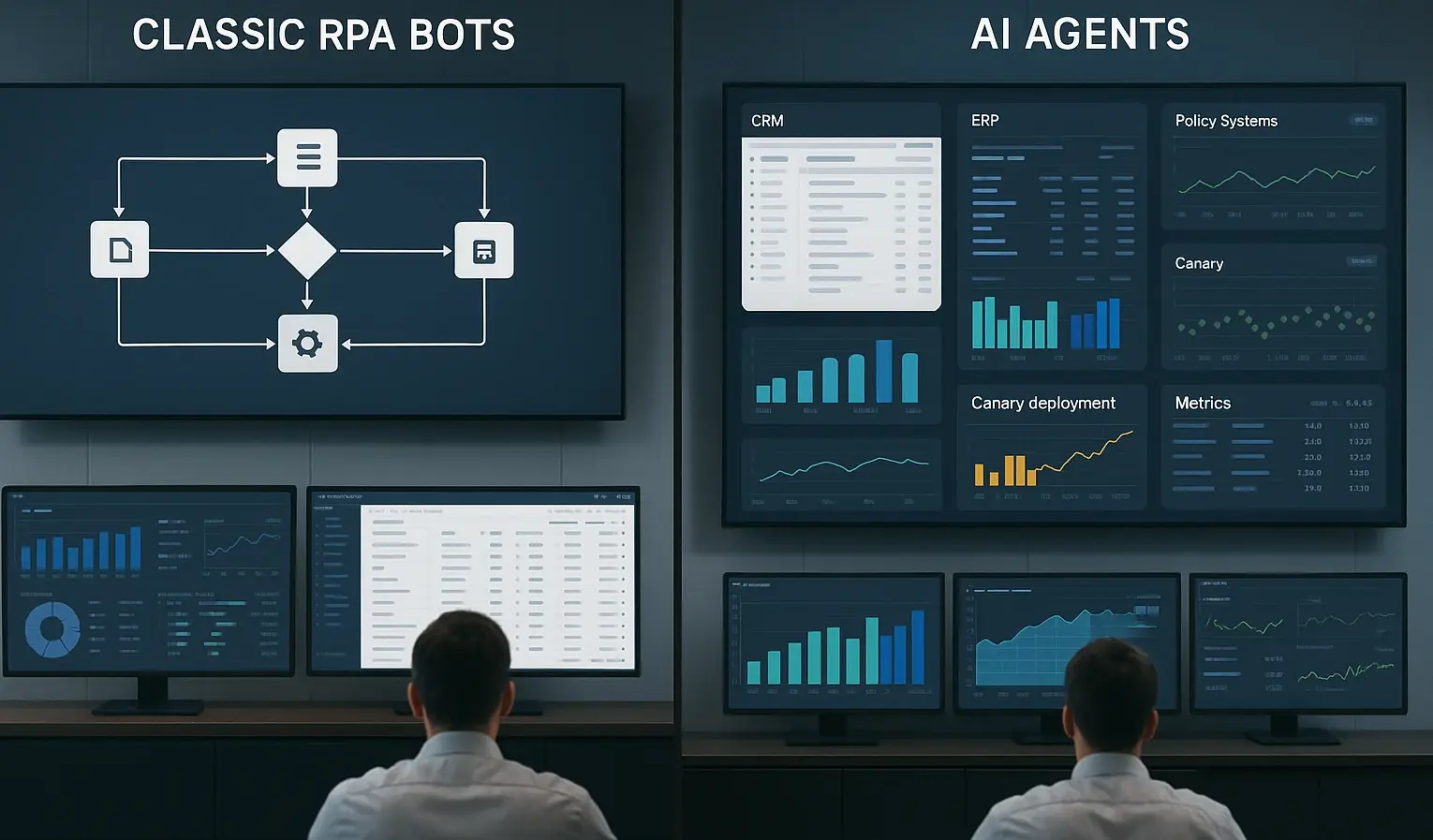

RPA earned its keep by automating repetitive, rules‑based work—form fills, reconciliations, and copy‑paste between systems without APIs. You should keep it where inputs are structured, variance is low, and exceptions are rare. But as processes cross CRM, ERP, policy admin, and custom apps, rules alone hit a ceiling: document ambiguity, policy nuance, and multi‑step decisions balloon exception rates and maintenance costs. That’s where agentic AI extends the stack.

Agents perceive (interpret text, images, or conversation), decide (weigh evidence against policy), and act (take the next step with context), closing the gap between “automation” and “outcome.” The goal isn’t to rip and replace. It’s to layer intelligence where judgment matters while preserving the uptime you’ve earned with RPA.

Start by inventorying candidate workflows and segmenting them by variance and risk. Keep RPA for low‑variance steps (e.g., deterministic data entry) and place agents at decision nodes (eligibility checks, exception routing, high‑value outreach). In regulated contexts like insurance and financial services, introduce agents in advisory or supervised modes before granting autonomy. Map each step to a risk tier and decide how humans remain in command for high‑stakes actions. Vendor hype aside, best‑practice guides emphasize progressive delivery for AI changes: blue/green and canary strategies reduce the blast radius as you introduce agent capabilities. For details, see HashiCorp's guidance and, for a practitioner‑friendly explanation, Harness.
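The segmentation above can be sketched as a small routing rule. This is an illustration only: the `Step` fields, the three-level risk scale, and the 5% exception-rate threshold are hypothetical choices, not values from any standard, and a real inventory would calibrate them against observed data.

```python
from dataclasses import dataclass
from enum import Enum


class Handler(Enum):
    RPA = "rpa"
    AGENT_ADVISORY = "agent_advisory"      # agent recommends, a human decides
    AGENT_SUPERVISED = "agent_supervised"  # agent acts, a human approves


@dataclass(frozen=True)
class Step:
    name: str
    exception_rate: float  # share of runs that fall out to manual handling (0..1)
    needs_judgment: bool   # e.g. eligibility checks, exception routing
    risk_tier: int         # hypothetical scale: 1 = low stakes, 3 = high stakes


def assign_handler(step: Step, max_rpa_exception_rate: float = 0.05) -> Handler:
    """Route a workflow step to RPA or to an agent mode (assumed thresholds)."""
    # Low-variance, rules-based work stays on RPA.
    if not step.needs_judgment and step.exception_rate <= max_rpa_exception_rate:
        return Handler.RPA
    # High-stakes decisions start in advisory mode: humans remain in command.
    if step.risk_tier >= 3:
        return Handler.AGENT_ADVISORY
    return Handler.AGENT_SUPERVISED
```

For example, `assign_handler(Step("claim eligibility", 0.20, True, 3))` returns `Handler.AGENT_ADVISORY`, while a deterministic data-entry step with a 1% exception rate stays on RPA.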

Treat agents like first‑class microservices. Define stable, versioned APIs; enforce least‑privilege access; and codify policy checks at the edge. Mediate interactions through an orchestration layer instead of wiring agents directly into brittle legacy workflows. That layer should handle: authentication and scoped tokens; semantic retrieval boundaries for knowledge lookups; decision logging; idempotent execution; and backoff/retry/circuit breakers. This design isolates change, enables shadow mode, and lets you scale traffic gradually via feature flags.
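As a rough sketch of the execution concerns listed above, the class below combines idempotent execution, exponential backoff, and a deliberately simplified circuit breaker. The `Orchestrator` name and its parameters are hypothetical; results are kept in memory only, and the breaker has no half-open state or reset timer, both of which a production layer would need.

```python
import time
from typing import Any, Callable, Dict


class CircuitOpenError(RuntimeError):
    """Raised when calls are blocked because the breaker is open."""


class Orchestrator:
    def __init__(self, max_retries: int = 3, base_delay: float = 0.1,
                 failure_threshold: int = 5,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.max_retries = max_retries
        self.base_delay = base_delay
        self.failure_threshold = failure_threshold
        self._sleep = sleep
        self._results: Dict[str, Any] = {}   # idempotency key -> stored result
        self._consecutive_failures = 0

    def execute(self, idempotency_key: str, action: Callable[[], Any]) -> Any:
        # Idempotency: a repeated key returns the stored result, no re-execution.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        # Circuit breaker: stop calling a dependency that keeps failing.
        if self._consecutive_failures >= self.failure_threshold:
            raise CircuitOpenError("too many consecutive failures")
        for attempt in range(self.max_retries + 1):
            try:
                result = action()
            except Exception:
                self._consecutive_failures += 1
                out_of_retries = attempt == self.max_retries
                breaker_tripped = self._consecutive_failures >= self.failure_threshold
                if out_of_retries or breaker_tripped:
                    raise
                # Exponential backoff: base, 2x base, 4x base, ...
                self._sleep(self.base_delay * 2 ** attempt)
            else:
                self._consecutive_failures = 0
                self._results[idempotency_key] = result
                return result
```

Passing `sleep` as a parameter keeps the sketch testable without real delays. Deriving the idempotency key from the business event (for example, a claim ID plus an action name) rather than from the request itself is one common choice, so that a retried upstream message cannot trigger the same action twice.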

Observability is your safety net. If you can’t see it, you can’t scale it. Instrument the full path from signal to action with distributed tracing, structured logs, and metrics dashboards. Track golden signals—latency, error rate, saturation, throughput—and add cost and quality gauges (token spend, approval rate, human‑override rate). Accessible primers make the benefits concrete for ops leaders; see Splunk.
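To make the quality and cost gauges concrete, here is a minimal sketch that derives them from structured action records. The `ActionRecord` fields are assumptions about what your logs contain, and the p95 uses a simple nearest-rank method; a metrics backend would normally compute these for you.

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class ActionRecord:
    latency_ms: float
    error: bool
    approved: bool    # a human approved the agent's proposal
    overridden: bool  # a human replaced the agent's proposal
    tokens: int       # model tokens spent on this action


def summarize(records: Iterable[ActionRecord]) -> Dict[str, float]:
    """Aggregate golden signals plus cost and quality gauges for a time window."""
    rows = list(records)
    n = len(rows)
    if n == 0:
        raise ValueError("no records to summarize")
    latencies = sorted(r.latency_ms for r in rows)
    p95 = latencies[math.ceil(0.95 * n) - 1]  # nearest-rank percentile
    tokens_total = sum(r.tokens for r in rows)
    return {
        "count": n,
        "error_rate": sum(r.error for r in rows) / n,
        "approval_rate": sum(r.approved for r in rows) / n,
        "human_override_rate": sum(r.overridden for r in rows) / n,
        "p95_latency_ms": p95,
        "tokens_total": tokens_total,
        "tokens_per_action": tokens_total / n,
    }
```

A rising human-override rate alongside a stable error rate is the kind of signal these gauges are meant to surface: the system is technically healthy, but reviewers increasingly disagree with it.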

Maintain an immutable decision log that captures prompts, retrieved evidence, policies applied, and downstream effects. Security and privacy must be designed in from day one. Implement allow/deny lists for systems, fields, and actions; mask PII at ingestion; and gate higher‑risk actions behind approvals. Keep model and prompt versions tied to releases, and run automated checks for sensitive data exposure. Align governance with the NIST AI RMF and ISO/IEC 42001 (overview at ISMS.online).
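One way to approximate the immutable decision log described above is a hash chain, where each entry commits to the one before it. The sketch below is tamper-evident rather than truly immutable, keeps entries in memory, and uses hypothetical record fields; real deployments would typically add write-once storage and mask PII before anything is appended.

```python
import hashlib
import json
from typing import Any, Dict, List


class DecisionLog:
    """Append-only log in which every entry hashes the previous entry."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    @staticmethod
    def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
        # sort_keys gives a stable serialization, so equal records hash equally.
        payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: Dict[str, Any]) -> str:
        """Store a JSON-serializable record and return its chained hash."""
        prev = self._entries[-1]["hash"] if self._entries else self.GENESIS
        stored = json.loads(json.dumps(record))  # defensive copy
        entry = {"record": stored, "prev": prev, "hash": self._digest(prev, stored)}
        self._entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = self.GENESIS
        for entry in self._entries:
            if entry["prev"] != prev:
                return False
            if entry["hash"] != self._digest(prev, entry["record"]):
                return False
            prev = entry["hash"]
        return True
```

A record might carry the prompt version, the IDs of retrieved evidence, the policies applied, and the resulting action, for example `log.append({"prompt_version": "v3", "policy": "refund-under-100", "action": "approve"})`; those field names are illustrative only.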

Adopt a migration playbook that respects uptime and the business calendar:

1) Shadow mode: agents read and recommend only. Compare recommendations against current outcomes to build confidence and calibrate metrics.
2) Supervised execution: enable a narrow set of low‑risk actions behind feature flags for a small cohort (canary). Define stop‑loss thresholds and instant rollback paths.
3) Phased autonomy: expand to repetitive, mid‑value tasks with clear policies. Keep humans in command for high‑stakes actions.
4) Scale and optimize: rotate models and prompts, run controlled experiments, and refresh policies as regulations or products change.

Define both technical and business SLAs/SLOs. Technical: latency, availability, freshness, and quality. Business: cycle time, accuracy, CSAT/NPS, cost‑to‑serve, and revenue or loss‑ratio impact. Make value realization explicit with randomized controlled experiments or strong quasi‑experimental designs; release only when confidence bounds clear hurdle rates.
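The canary, stop-loss, and release-gate ideas in the playbook above can be sketched in a few functions. All names, the 50-sample minimum, and the normal-approximation (Wald) interval are assumptions made for illustration; a feature-flag platform and a proper experiment analysis would replace them in practice.

```python
import hashlib
import math


def in_canary(entity_id: str, flag: str, percent: float) -> bool:
    """Deterministically place an entity in a canary cohort of `percent` (0..100)."""
    digest = hashlib.sha256(f"{flag}:{entity_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100


def should_roll_back(errors: int, total: int, max_error_rate: float,
                     min_samples: int = 50) -> bool:
    """Stop-loss: roll back once enough traffic shows an error rate above the limit."""
    if total < min_samples:
        return False  # too little data to judge either way
    return errors / total > max_error_rate


def uplift_lower_bound(success_t: int, n_t: int, success_c: int, n_c: int,
                       z: float = 1.96) -> float:
    """Lower end of an approximate 95% interval for treatment minus control rate."""
    p_t = success_t / n_t
    p_c = success_c / n_c
    se = math.sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)
    return (p_t - p_c) - z * se


def clears_hurdle(success_t: int, n_t: int, success_c: int, n_c: int,
                  hurdle: float) -> bool:
    """Release gate: the pessimistic uplift estimate must exceed the hurdle rate."""
    return uplift_lower_bound(success_t, n_t, success_c, n_c) > hurdle
```

Hashing the flag name together with the entity ID keeps cohort membership stable across requests while giving each flag an independent cohort. Gating on the lower bound rather than the point estimate means a change ships only when even a pessimistic reading of the experiment clears the hurdle.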

The progressive delivery guides from HashiCorp and Harness offer step‑by‑step patterns for shipping AI changes without disruption.

Finally, socialize the operating model. Train teams, publish decision playbooks, and set clear escalation paths. Treat automation as a product with a backlog and owners. With this discipline, operations leaders evolve from brittle scripts to resilient, learning systems and achieve measurable ROI without breaking what already works.
