Personalization Payback: The Real ROI of AI at Scale

A CFO-ready framework to target, test, and scale AI personalization profitably. Personalization...

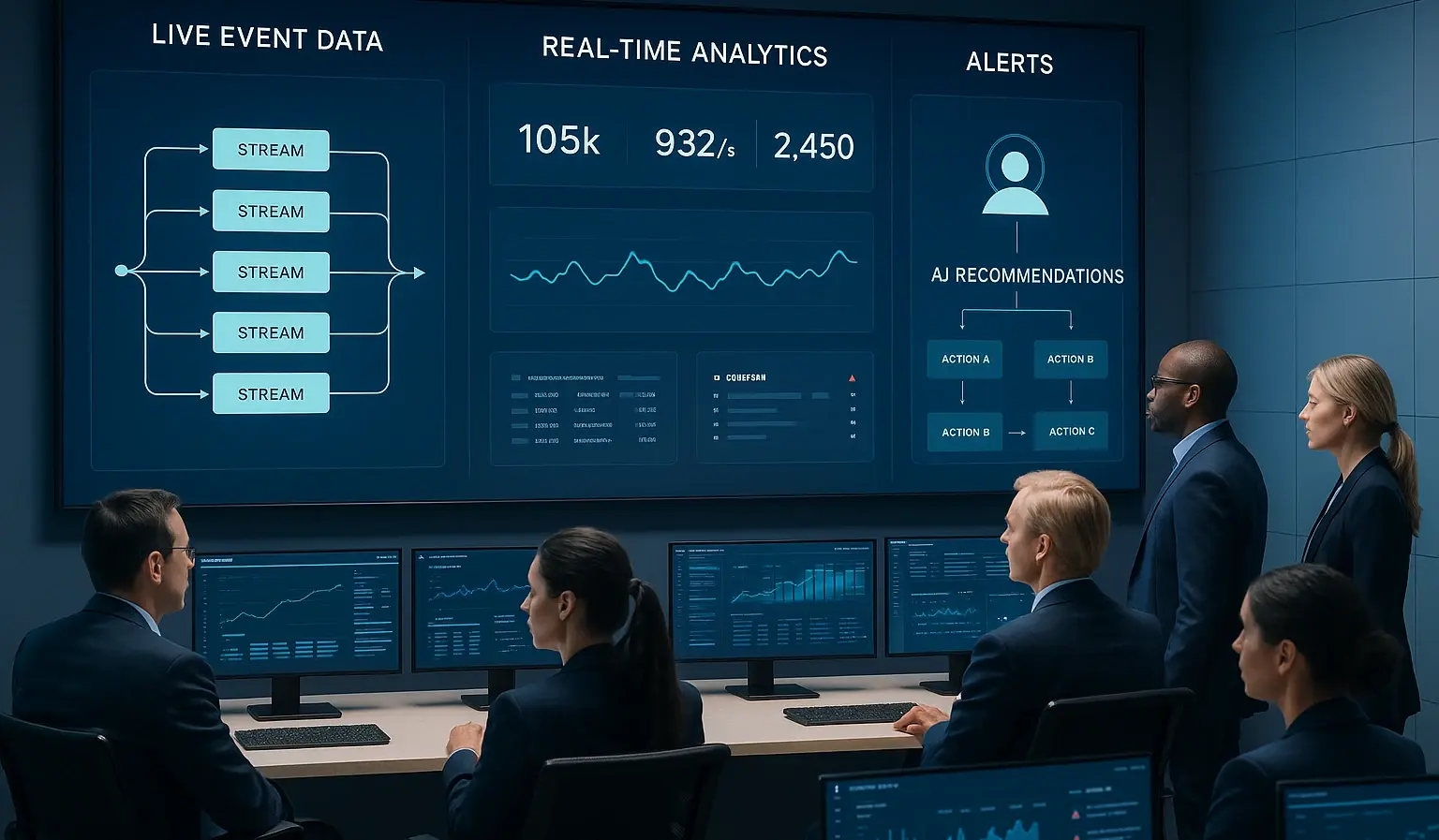

How real-time analytics and AI shift decisions from reports to results.

Most enterprises have gotten very good at analyzing yesterday. Data warehouses, BI dashboards, and weekly reviews help leaders see trends—after the fact. Real-time analytics and AI change the cadence. When events stream in continuously and models can evaluate context on the fly, decisions move from reactive reports to proactive interventions.

The difference shows up in cycle times, customer experiences, and cost-to-serve. In insurance, a claims status anomaly can trigger same‑day outreach that prevents a complaint. In SaaS, usage cliffs can be flagged and addressed before a renewal risk hardens. In retail, inventory-aware recommendations can steer demand away from out-of-stock items in the moment.

Real-time also reshapes measurement: instead of inspecting performance monthly, teams can observe effects within minutes and iterate faster. Real-time does not mean “everything, everywhere, all at once.” It means choosing which moments are economically meaningful and time-sensitive.

McKinsey’s work on next-best-experience emphasizes prioritizing decision points that truly change outcomes—renewal nudges, onboarding milestones, claims transparency—rather than chasing vanity interactions; see McKinsey. The core pattern is event-driven: product, channel, and system events stream into an operational store; an AI decision layer retrieves just enough context (with consent) and selects an action; activation systems execute and write telemetry back. Done right, this becomes a closed loop that compounds learning.
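The event-driven loop described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the `Event` and `DecisionLoop` names, the in-memory `profiles` dictionary standing in for the operational store, and the `claim_status_stalled` event type are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Event:
    customer_id: str
    kind: str  # hypothetical event type, e.g. "claim_status_stalled"


@dataclass
class DecisionLoop:
    profiles: dict  # stand-in for the operational store
    telemetry: list = field(default_factory=list)

    def get_context(self, customer_id: str) -> Optional[dict]:
        """Retrieve just enough context, and only if consent is recorded."""
        profile = self.profiles.get(customer_id, {})
        if not profile.get("consent", False):
            return None
        return {"channel": profile.get("preferred_channel")}

    def decide(self, event: Event, context: Optional[dict]) -> str:
        """Select an action; a single illustrative rule stands in for the decision layer."""
        if context is None:
            return "no_action"
        if event.kind == "claim_status_stalled":
            return "send_status_update"
        return "no_action"

    def handle(self, event: Event) -> str:
        context = self.get_context(event.customer_id)
        action = self.decide(event, context)
        # Write telemetry back so later analysis can close the loop.
        self.telemetry.append(
            {
                "customer_id": event.customer_id,
                "event": event.kind,
                "action": action,
                "had_consent": context is not None,
            }
        )
        return action


loop = DecisionLoop(
    profiles={
        "c1": {"consent": True, "preferred_channel": "sms"},
        "c2": {"consent": False},
    }
)
print(loop.handle(Event("c1", "claim_status_stalled")))
print(loop.handle(Event("c2", "claim_status_stalled")))
```

In a production system the activation step would call a real channel and the telemetry would flow back onto the stream; here both are reduced to a return value and a list so the shape of the loop stays visible.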

Real-time elevates governance from an afterthought to an invariant. Decisions that happen in seconds still need auditability, safety rails, and privacy. The NIST AI RMF provides a risk-based vocabulary that can be mapped onto fast decision systems. Consent travels with the data; purpose limitation is checked at activation; and human-in-the-loop is enforced for higher-risk actions. This alignment is what allows MapleSage to deploy agentic AI in regulated contexts—keeping the focus on results without compromising trust.
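One way to picture purpose limitation at activation and human-in-the-loop for higher-risk actions is a small gate that every decision passes through before execution. The sketch below is an assumption-laden illustration: the `Consent` type, the `gate` function, and the contents of `HIGH_RISK_ACTIONS` are hypothetical and are not drawn from the NIST AI RMF or from any MapleSage system.

```python
from dataclasses import dataclass

# Hypothetical list of actions that always require human review.
HIGH_RISK_ACTIONS = {"deny_claim", "cancel_policy"}


@dataclass(frozen=True)
class Consent:
    purposes: frozenset  # purposes the customer has agreed to


def gate(action: str, purpose: str, consent: Consent) -> str:
    """Return what may happen to a proposed action at activation time."""
    # Purpose limitation: the action's purpose must be covered by consent.
    if purpose not in consent.purposes:
        return "blocked"
    # Human-in-the-loop: higher-risk actions are routed to a reviewer.
    if action in HIGH_RISK_ACTIONS:
        return "needs_human_review"
    return "execute"


consent = Consent(purposes=frozenset({"service_updates"}))
print(gate("send_status_update", "service_updates", consent))
print(gate("send_offer", "marketing", consent))
print(gate("deny_claim", "service_updates", consent))
```

Keeping the gate as a pure function of action, purpose, and consent makes each outcome easy to log and audit alongside the decision itself.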

Speed emerges from architecture as much as algorithms. The backbone is streaming data: instrument your apps and systems to emit events (e.g., Kafka topics) that are schema‑managed and quality-checked. For a concise overview of the pattern, see Confluent. Cloud providers offer reference designs for streaming ETL, feature stores, and online serving—for example, Google Cloud.
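As a rough illustration of what "schema-managed and quality-checked" can mean in practice, the sketch below validates an event against a required-field contract and routes failures to a dead-letter destination. It uses plain Python rather than any Kafka or schema-registry client, and the field names and the `events.valid` and `events.dead_letter` topic names are assumptions chosen for the example.

```python
# Hypothetical event contract: field name -> expected Python type.
REQUIRED_FIELDS = {
    "event_id": str,
    "event_type": str,
    "customer_id": str,
    "occurred_at": str,  # ISO 8601 timestamp kept as a string in this sketch
    "schema_version": int,
}


def validate_event(event: dict) -> list:
    """Return a list of problems; an empty list means the event passes."""
    problems = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in event:
            problems.append(f"missing field: {name}")
        elif not isinstance(event[name], expected):
            problems.append(f"wrong type for {name}: expected {expected.__name__}")
    return problems


def route(event: dict) -> str:
    """Pick a destination topic name based on validation."""
    return "events.valid" if not validate_event(event) else "events.dead_letter"


good = {
    "event_id": "e-1",
    "event_type": "usage_drop",
    "customer_id": "c-42",
    "occurred_at": "2024-01-01T00:00:00Z",
    "schema_version": 1,
}
bad = {"event_id": "e-2", "event_type": "usage_drop"}

print(route(good))
print(route(bad), validate_event(bad))
```

A real deployment would more likely lean on a schema registry and serialization format to enforce the contract at the producer, but the principle is the same: malformed events are caught before they reach the decision layer.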

On top of the stream, you need a decision layer that handles most moments with simple rules and applies models selectively where the decision surface is complex (propensity, uplift, eligibility). Stream processors like Apache Flink help aggregate features and detect patterns with low latency, while a compact online feature store ensures models read consistent inputs.

Data minimization is both a performance feature and a privacy feature. Most real-time actions do not need the whole profile, only a small context bundle. Enforce least‑privilege retrieval, field allow/deny lists, and region-aware access. Log every decision with rationale and evidence; those logs power both optimization and compliance.
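The ideas in this passage (a small context bundle, rules first with a model as fallback, and a logged rationale) can be combined in one short sketch. Everything named here is hypothetical: the `renewal_offer` use case, the allow-lists, the 30-day rule, and the 0.7 model threshold are placeholders rather than recommended values.

```python
import json
from datetime import datetime, timezone

# Hypothetical per-use-case allow-lists for fields and regions.
ALLOWED_FIELDS = {"renewal_offer": {"plan", "days_to_renewal", "region"}}
ALLOWED_REGIONS = {"renewal_offer": {"EU", "US"}}


def context_bundle(profile: dict, use_case: str) -> dict:
    """Return only the allow-listed fields, and nothing for disallowed regions."""
    if profile.get("region") not in ALLOWED_REGIONS.get(use_case, set()):
        return {}
    allowed = ALLOWED_FIELDS.get(use_case, set())
    return {k: v for k, v in profile.items() if k in allowed}


def decide(bundle: dict, model=None) -> tuple:
    """Rules first; consult the optional model only when no rule fires."""
    if not bundle:
        return "no_action", "no permitted context"
    if bundle.get("days_to_renewal", 999) <= 30:
        return "send_renewal_nudge", "rule: renewal within 30 days"
    if model is not None:
        score = model(bundle)
        if score >= 0.7:
            return "send_renewal_nudge", f"model: propensity {score:.2f} >= 0.70"
    return "no_action", "no rule or model threshold met"


def log_decision(action: str, rationale: str, bundle: dict) -> str:
    """Serialize the decision with its rationale and the evidence it used."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "rationale": rationale,
        "evidence": bundle,
    }
    return json.dumps(record, sort_keys=True)


profile = {"plan": "pro", "days_to_renewal": 12, "region": "EU", "email": "a@example.com"}
bundle = context_bundle(profile, "renewal_offer")
action, rationale = decide(bundle)
print(log_decision(action, rationale, bundle))
```

Because the log records only the minimized bundle, the evidence trail never contains fields the decision was not allowed to see.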

Architecturally, treat AI agents like microservices: versioned APIs, feature flags, canary rollouts, and kill switches. This allows you to ship changes without downtime and to roll back instantly if metrics degrade.

Finally, design observability in. Distributed tracing reveals where latency hides; metrics capture golden signals (latency, error rate, saturation, throughput) and business KPIs (conversion, NPS, cycle time). With proper tracing and logs, product, data, and risk teams can speak the same language about why a decision fired, what it used, and whether it helped. That shared visibility is how real-time moves from fragile demos to durable value.
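The canary rollouts and kill switches mentioned above can be approximated with deterministic bucketing plus an error-rate guard. The sketch below is illustrative only: the `AgentRollout` class, the `stable` and `canary` version labels, and the minimum sample of 20 calls are assumptions, and a real system would typically delegate this to a feature-flag service.

```python
import hashlib


class AgentRollout:
    """Routes a share of customers to a canary version and trips a kill switch on errors."""

    def __init__(self, canary_percent: int, max_error_rate: float, min_calls: int = 20):
        self.canary_percent = canary_percent
        self.max_error_rate = max_error_rate
        self.min_calls = min_calls
        self.calls = 0
        self.errors = 0
        self.killed = False

    def version_for(self, customer_id: str) -> str:
        """Deterministically assign a customer to 'canary' or 'stable'."""
        if self.killed:
            return "stable"
        digest = hashlib.sha256(customer_id.encode("utf-8")).hexdigest()
        bucket = int(digest, 16) % 100
        return "canary" if bucket < self.canary_percent else "stable"

    def record(self, ok: bool) -> None:
        """Record one canary call; trip the kill switch if the error rate is too high."""
        self.calls += 1
        if not ok:
            self.errors += 1
        if self.calls >= self.min_calls and self.errors / self.calls > self.max_error_rate:
            self.killed = True


rollout = AgentRollout(canary_percent=10, max_error_rate=0.05)
print(rollout.version_for("customer-123"))
```

Hashing the customer ID keeps each customer on the same version across requests, which makes before-and-after comparisons cleaner than random assignment per call.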

Not every decision deserves real-time treatment. The payoff concentrates where timeliness changes outcomes, volumes are high enough to matter, and actions are both available and measurable.

High payback examples for MapleSage’s ideal customer profiles (ICPs):

- Insurance: claims status transparency, fraud triage flags, and renewal‑window outreach. Faster updates reduce calls and complaints; selective interventions protect NPS and retention. Benchmarks show that real-time decisioning can materially improve satisfaction and cycle times when built on clean, connected data (see McKinsey).

- SaaS: onboarding blockers, usage anomaly detection, and executive‑sponsor engagement. Acting within hours—rather than weeks—prevents churn cascades.

- Retail: inventory‑aware recommendations and service recovery. Steer demand away from stockouts and resolve friction before it escalates.

Lower payback areas include infrequent, high‑judgment decisions with long feedback cycles (e.g., annual pricing strategy), where batched analysis and workshops still rule. The discipline is to match decision velocity to business value.

To operationalize proof, run canary rollouts and instrument the full pipeline. Measure the funnel from trigger to outcome: event rate, decision fire rate, action execution, and converted outcomes. Attribute lift at the journey‑node level rather than the channel level. Maintain stop‑loss thresholds and instant rollback paths. With this rigor, and with privacy‑first governance via the NIST AI RMF, real-time AI becomes a reliable lever, not a risky stunt. As your telemetry and tests compound, so do your returns, turning “faster data” into demonstrably better decisions.
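The funnel from trigger to outcome and the stop-loss check can be expressed as simple ratios. The sketch below assumes counts are already aggregated per journey node; the function names, the example counts, and the stop-loss of minus two percentage points are hypothetical placeholders.

```python
def funnel_rates(events: int, decisions: int, actions: int, outcomes: int) -> dict:
    """Compute stage-to-stage rates for one journey node."""

    def ratio(numerator: int, denominator: int) -> float:
        return numerator / denominator if denominator else 0.0

    return {
        "decision_fire_rate": ratio(decisions, events),
        "execution_rate": ratio(actions, decisions),
        "conversion_rate": ratio(outcomes, actions),
    }


def lift(treated_rate: float, control_rate: float) -> float:
    """Absolute lift of the treated group over the control group."""
    return treated_rate - control_rate


def should_roll_back(treated_rate: float, control_rate: float, stop_loss: float = -0.02) -> bool:
    """Trigger rollback when lift falls below the stop-loss threshold."""
    return lift(treated_rate, control_rate) < stop_loss


rates = funnel_rates(events=1000, decisions=200, actions=180, outcomes=45)
print(rates)
print(should_roll_back(treated_rate=0.10, control_rate=0.13))
```

This sketch ignores statistical significance; in practice a rollback rule would usually also require a minimum sample size or a confidence bound before acting on an observed difference.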

